Complexity, Fractals, and Entropy#

Main#

complexity()#

- complexity(signal, which='makowski2022', delay=1, dimension=2, tolerance='sd', **kwargs)[source]#

Complexity and Chaos Analysis

Measuring the complexity of a signal refers to the quantification of various aspects related to concepts such as chaos, entropy, unpredictability, and fractal dimension.

Tip

We recommend checking our open-access review for an introduction to fractal physiology and its application in neuroscience.

There are many indices that have been developed and used to assess the complexity of signals, and all of them come with different specificities and limitations. While they should be used in an informed manner, it is also convenient to have a single function that can compute multiple indices at once.

The

nk.complexity()function can be used to compute a useful subset of complexity metrics and features. While this is great for exploratory analyses, we recommend running each function separately, to gain more control over the parameters and information that you get.Warning

The indices included in this function will be subjected to change in future versions, depending on what the literature suggests. We recommend using this function only for quick exploratory analyses, but then replacing it by the calls to the individual functions.

Check-out our open-access study explaining the selection of indices.

The categorization by “computation time” is based on our study results:

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

which (list) – What metrics to compute. Can be “makowski2022”.

delay (int) – Time delay (often denoted Tau \(\tau\), sometimes referred to as lag) in samples. See

complexity_delay()to estimate the optimal value for this parameter.dimension (int) – Embedding Dimension (m, sometimes referred to as d or order). See

complexity_dimension()to estimate the optimal value for this parameter.tolerance (float) – Tolerance (often denoted as r), distance to consider two data points as similar. If

"sd"(default), will be set to \(0.2 * SD_{signal}\). Seecomplexity_tolerance()to estimate the optimal value for this parameter.

- Returns:

df (pd.DataFrame) – A dataframe with one row containing the results for each metric as columns.

info (dict) – A dictionary containing additional information.

Examples

Example 1: Compute fast and medium-fast complexity metrics

In [1]: import neurokit2 as nk # Simulate a signal of 3 seconds In [2]: signal = nk.signal_simulate(duration=3, frequency=[5, 10]) # Compute selection of complexity metrics (Makowski et al., 2022) In [3]: df, info = nk.complexity(signal, which = "makowski2022") In [4]: df Out[4]: AttEn BubbEn CWPEn ... MFDFA_Width MSPEn SVDEn 0 0.519414 0.089258 0.000168 ... 0.905873 0.947385 0.164543 [1 rows x 15 columns]

Example 2: Compute complexity over time

In [5]: import numpy as np In [6]: import pandas as pd In [7]: import neurokit2 as nk # Create dynamically varying noise In [8]: amount_noise = nk.signal_simulate(duration=2, frequency=0.9) In [9]: amount_noise = nk.rescale(amount_noise, [0, 0.5]) In [10]: noise = np.random.uniform(0, 2, len(amount_noise)) * amount_noise # Add to simple signal In [11]: signal = noise + nk.signal_simulate(duration=2, frequency=5) In [12]: nk.signal_plot(signal, sampling_rate = 1000)

# Create function-wrappers that only return the index value In [13]: pfd = lambda x: nk.fractal_petrosian(x)[0] In [14]: kfd = lambda x: nk.fractal_katz(x)[0] In [15]: sfd = lambda x: nk.fractal_sevcik(x)[0] In [16]: svden = lambda x: nk.entropy_svd(x)[0] In [17]: fisher = lambda x: -1 * nk.fisher_information(x)[0] # FI is anticorrelated with complexity # Use them in a rolling window In [18]: rolling_kfd = pd.Series(signal).rolling(500, min_periods = 300, center=True).apply(kfd) In [19]: rolling_pfd = pd.Series(signal).rolling(500, min_periods = 300, center=True).apply(pfd) In [20]: rolling_sfd = pd.Series(signal).rolling(500, min_periods = 300, center=True).apply(sfd) In [21]: rolling_svden = pd.Series(signal).rolling(500, min_periods = 300, center=True).apply(svden) In [22]: rolling_fisher = pd.Series(signal).rolling(500, min_periods = 300, center=True).apply(fisher) In [23]: nk.signal_plot([signal, ....: rolling_kfd.values, ....: rolling_pfd.values, ....: rolling_sfd.values, ....: rolling_svden.values, ....: rolling_fisher], ....: labels = ["Signal", ....: "Petrosian Fractal Dimension", ....: "Katz Fractal Dimension", ....: "Sevcik Fractal Dimension", ....: "SVD Entropy", ....: "Fisher Information"], ....: sampling_rate = 1000, ....: standardize = True) ....:

References

Lau, Z. J., Pham, T., Chen, S. H. A., & Makowski, D. (2022). Brain entropy, fractal dimensions and predictability: A review of complexity measures for EEG in healthy and neuropsychiatric populations. European Journal of Neuroscience, 1-23.

Makowski, D., Te, A. S., Pham, T., Lau, Z. J., & Chen, S. H. (2022). The Structure of Chaos: An Empirical Comparison of Fractal Physiology Complexity Indices Using NeuroKit2. Entropy, 24 (8), 1036.

Parameters Choice#

complexity_delay()#

- complexity_delay(signal, delay_max=50, method='fraser1986', algorithm=None, show=False, silent=False, **kwargs)[source]#

Automated selection of the optimal Delay (Tau)

The time delay (Tau \(\tau\), also referred to as Lag) is one of the two critical parameters (the other being the

Dimensionm) involved in the construction of the time-delay embedding of a signal. It corresponds to the delay in samples between the original signal and its delayed version(s). In other words, how many samples do we consider between a given state of the signal and its closest past state.When \(\tau\) is smaller than the optimal theoretical value, consecutive coordinates of the system’s state are correlated and the attractor is not sufficiently unfolded. Conversely, when \(\tau\) is larger than it should be, successive coordinates are almost independent, resulting in an uncorrelated and unstructured cloud of points.

The selection of the parameters delay and

*dimension*is a challenge. One approach is to select them (semi) independently (as dimension selection often requires the delay), usingcomplexity_delay()andcomplexity_dimension(). However, some joint-estimation methods do exist, that attempt at finding the optimal delay and dimension at the same time.Note also that some authors (e.g., Rosenstein, 1994) suggest identifying the optimal embedding dimension first, and that the optimal delay value should then be considered as the optimal delay between the first and last delay coordinates (in other words, the actual delay should be the optimal delay divided by the optimal embedding dimension minus 1).

Several authors suggested different methods to guide the choice of the delay:

Fraser and Swinney (1986) suggest using the first local minimum of the mutual information between the delayed and non-delayed time series, effectively identifying a value of Tau for which they share the least information (and where the attractor is the least redundant). Unlike autocorrelation, mutual information takes into account also nonlinear correlations.

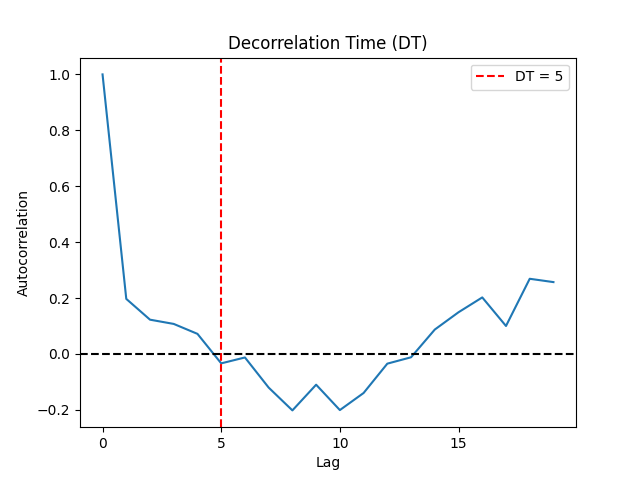

Theiler (1990) suggested to select Tau where the autocorrelation between the signal and its lagged version at Tau first crosses the value \(1/e\). The autocorrelation-based methods have the advantage of short computation times when calculated via the fast Fourier transform (FFT) algorithm.

Casdagli (1991) suggests instead taking the first zero-crossing of the autocorrelation.

Rosenstein (1993) suggests to approximate the point where the autocorrelation function drops to \((1 - 1/e)\) of its maximum value.

Rosenstein (1994) suggests to the point close to 40% of the slope of the average displacement from the diagonal (ADFD).

Kim (1999) suggests estimating Tau using the correlation integral, called the C-C method, which has shown to agree with those obtained using the Mutual Information. This method makes use of a statistic within the reconstructed phase space, rather than analyzing the temporal evolution of the time series. However, computation times are significantly long for this method due to the need to compare every unique pair of pairwise vectors within the embedded signal per delay.

Lyle (2021) describes the “Symmetric Projection Attractor Reconstruction” (SPAR), where \(1/3\) of the the dominant frequency (i.e., of the length of the average “cycle”) can be a suitable value for approximately periodic data, and makes the attractor sensitive to morphological changes. See also Aston’s talk. This method is also the fastest but might not be suitable for aperiodic signals. The

algorithmargument (default to"fft") and will be passed as themethodargument ofsignal_psd().

Joint-Methods for Delay and Dimension

Gautama (2003) mentions that in practice, it is common to have a fixed time lag and to adjust the embedding dimension accordingly. As this can lead to large m values (and thus to embedded data of a large size) and thus, slow processing, they describe an optimisation method to jointly determine m and \(\tau\), based on the entropy ratio.

Note

We would like to implement the joint-estimation by Matilla-García et al. (2021), please get in touch if you can help us!

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

delay_max (int) – The maximum time delay (Tau or lag) to test.

method (str) – The method that defines what to compute for each tested value of Tau. Can be one of

"fraser1986","theiler1990","casdagli1991","rosenstein1993","rosenstein1994","kim1999", or"lyle2021".algorithm (str) – The method used to find the optimal value of Tau given the values computed by the method. If

None(default), will select the algorithm according to the method. Modify only if you know what you are doing.show (bool) – If

True, will plot the metric values for each value of tau.silent (bool) – If

True, silence possible warnings.**kwargs (optional) – Additional arguments to be passed for C-C method.

- Returns:

delay (int) – Optimal time delay.

parameters (dict) – A dictionary containing additional information regarding the parameters used to compute optimal time-delay embedding.

Examples

Example 1: Comparison of different methods for estimating the optimal delay of an simple artificial signal.

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=10, sampling_rate=100, frequency=[1, 1.5], noise=0.02) In [3]: nk.signal_plot(signal)

In [4]: delay, parameters = nk.complexity_delay(signal, delay_max=100, show=True, ...: method="fraser1986") ...:

In [5]: delay, parameters = nk.complexity_delay(signal, delay_max=100, show=True, ...: method="theiler1990") ...:

In [6]: delay, parameters = nk.complexity_delay(signal, delay_max=100, show=True, ...: method="casdagli1991") ...:

In [7]: delay, parameters = nk.complexity_delay(signal, delay_max=100, show=True, ...: method="rosenstein1993") ...:

In [8]: delay, parameters = nk.complexity_delay(signal, delay_max=100, show=True, ...: method="rosenstein1994") ...:

In [9]: delay, parameters = nk.complexity_delay(signal, delay_max=100, show=True, ...: method="lyle2021") ...:

Example 2: Optimizing the delay and the dimension using joint-estimation methods.

In [10]: delay, parameters = nk.complexity_delay( ....: signal, ....: delay_max=np.arange(1, 30, 1), # Can be an int or a list ....: dimension_max=20, # Can be an int or a list ....: method="gautama2003", ....: surrogate_n=5, # Number of surrogate signals to generate ....: surrogate_method="random", # can be IAAFT, see nk.signal_surrogate() ....: show=True) ....:

# Optimal dimension In [11]: dimension = parameters["Dimension"] In [12]: dimension Out[12]: np.int64(20)

Note: A double-checking of that method would be appreciated! Please help us improve.

Example 3: Using a realistic signal.

In [13]: ecg = nk.ecg_simulate(duration=60*6, sampling_rate=200) In [14]: signal = nk.ecg_rate(nk.ecg_peaks(ecg, sampling_rate=200), ....: sampling_rate=200, ....: desired_length=len(ecg)) ....: In [15]: nk.signal_plot(signal)

In [16]: delay, parameters = nk.complexity_delay(signal, delay_max=1000, show=True)

References

Lyle, J. V., Nandi, M., & Aston, P. J. (2021). Symmetric Projection Attractor Reconstruction: Sex Differences in the ECG. Frontiers in cardiovascular medicine, 1034.

Gautama, T., Mandic, D. P., & Van Hulle, M. M. (2003, April). A differential entropy based method for determining the optimal embedding parameters of a signal. In 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing, 2003. Proceedings. (ICASSP’03). (Vol. 6, pp. VI-29). IEEE.

Camplani, M., & Cannas, B. (2009). The role of the embedding dimension and time delay in time series forecasting. IFAC Proceedings Volumes, 42(7), 316-320.

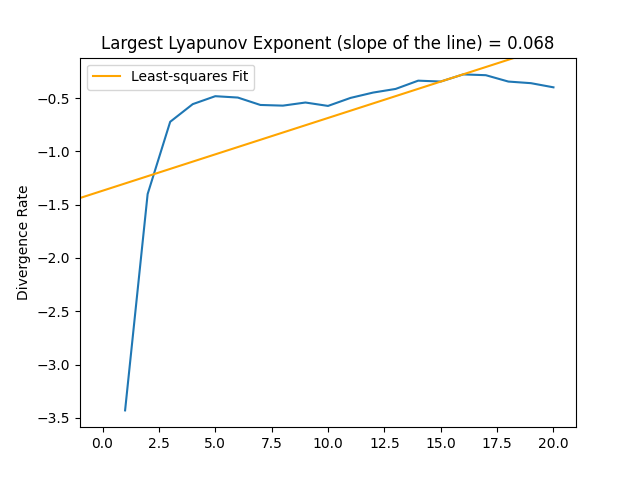

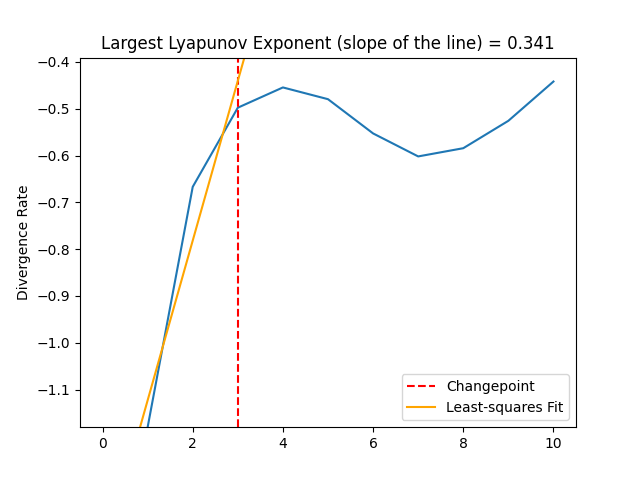

Rosenstein, M. T., Collins, J. J., & De Luca, C. J. (1993). A practical method for calculating largest Lyapunov exponents from small data sets. Physica D: Nonlinear Phenomena, 65(1-2), 117-134.

Rosenstein, M. T., Collins, J. J., & De Luca, C. J. (1994). Reconstruction expansion as a geometry-based framework for choosing proper delay times. Physica-Section D, 73(1), 82-98.

Kim, H., Eykholt, R., & Salas, J. D. (1999). Nonlinear dynamics, delay times, and embedding windows. Physica D: Nonlinear Phenomena, 127(1-2), 48-60.

Gautama, T., Mandic, D. P., & Van Hulle, M. M. (2003, April). A differential entropy based method for determining the optimal embedding parameters of a signal. In 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing, 2003. Proceedings. (ICASSP’03). (Vol. 6, pp. VI-29). IEEE.

Camplani, M., & Cannas, B. (2009). The role of the embedding dimension and time delay in time series forecasting. IFAC Proceedings Volumes, 42(7), 316-320.

complexity_dimension()#

- complexity_dimension(signal, delay=1, dimension_max=20, method='afnn', show=False, **kwargs)[source]#

Automated selection of the optimal Embedding Dimension (m)

The Embedding Dimension (m, sometimes referred to as d or order) is the second critical parameter (the first being the

delay\(\tau\)) involved in the construction of the time-delay embedding of a signal. It corresponds to the number of delayed states (versions of the signals lagged by \(\tau\)) that we include in the embedding.Though one can commonly find values of 2 or 3 used in practice, several authors suggested different numerical methods to guide the choice of m:

Correlation Dimension (CD): One of the earliest method to estimate the optimal m was to calculate the

correlation dimensionfor embeddings of various sizes and look for a saturation (i.e., a plateau) in its value as the embedding dimension increases. One of the limitation is that a saturation will also occur when there is not enough data to adequately fill the high-dimensional space (note that, in general, having such large embeddings that it significantly shortens the length of the signal is not recommended).FNN (False Nearest Neighbour): The method, introduced by Kennel et al. (1992), is based on the assumption that two points that are near to each other in the sufficient embedding dimension should remain close as the dimension increases. The algorithm checks the neighbours in increasing embedding dimensions until it finds only a negligible number of false neighbours when going from dimension \(m\) to \(m+1\). This corresponds to the lowest embedding dimension, which is presumed to give an unfolded space-state reconstruction. This method can fail in noisy signals due to the futile attempt of unfolding the noise (and in purely random signals, the amount of false neighbors does not substantially drops as m increases). The figure below show how projections to higher-dimensional spaces can be used to detect false nearest neighbours. For instance, the red and the yellow points are neighbours in the 1D space, but not in the 2D space.

AFN (Average False Neighbors): This modification by Cao (1997) of the FNN method addresses one of its main drawback, the need for a heuristic choice for the tolerance thresholds r. It uses the maximal Euclidian distance to represent nearest neighbors, and averages all ratios of the distance in \(m+1\) to \(m\) dimension and defines E1 and E2 as parameters. The optimal dimension corresponds to when E1 stops changing (reaches a plateau). E1 reaches a plateau at a dimension d0 if the signal comes from an attractor. Then d0*+1 is the optimal minimum embedding dimension. *E2 is a useful quantity to distinguish deterministic signals from stochastic signals. A constant E2 close to 1 for any embedding dimension d suggests random data, since the future values are independent of the past values.

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

delay (int) – Time delay (often denoted Tau \(\tau\), sometimes referred to as Lag) in samples. See

complexity_delay()to choose the optimal value for this parameter.dimension_max (int) – The maximum embedding dimension to test.

method (str) – Can be

"afn"(Average False Neighbor),"fnn"(False Nearest Neighbour), or"cd"(Correlation Dimension).show (bool) – Visualize the result.

**kwargs – Other arguments, such as

R=10.0orA=2.0(relative and absolute tolerance, only for'fnn'method).

- Returns:

dimension (int) – Optimal embedding dimension.

parameters (dict) – A dictionary containing additional information regarding the parameters used to compute the optimal dimension.

Examples

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=2, frequency=[5, 7, 8], noise=0.01) # Correlation Dimension In [3]: optimal_dimension, info = nk.complexity_dimension(signal, ...: delay=20, ...: dimension_max=10, ...: method='cd', ...: show=True) ...:

# FNN In [4]: optimal_dimension, info = nk.complexity_dimension(signal, ...: delay=20, ...: dimension_max=20, ...: method='fnn', ...: show=True) ...:

# AFNN In [5]: optimal_dimension, info = nk.complexity_dimension(signal, ...: delay=20, ...: dimension_max=20, ...: method='afnn', ...: show=True) ...:

References

Kennel, M. B., Brown, R., & Abarbanel, H. D. (1992). Determining embedding dimension for phase-space reconstruction using a geometrical construction. Physical review A, 45(6), 3403.

Cao, L. (1997). Practical method for determining the minimum embedding dimension of a scalar time series. Physica D: Nonlinear Phenomena, 110(1-2), 43-50.

Rhodes, C., & Morari, M. (1997). The false nearest neighbors algorithm: An overview. Computers & Chemical Engineering, 21, S1149-S1154.

Krakovská, A., Mezeiová, K., & Budáčová, H. (2015). Use of false nearest neighbours for selecting variables and embedding parameters for state space reconstruction. Journal of Complex Systems, 2015.

Gautama, T., Mandic, D. P., & Van Hulle, M. M. (2003, April). A differential entropy based method for determining the optimal embedding parameters of a signal. In 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing, 2003. Proceedings. (ICASSP’03). (Vol. 6, pp. VI-29). IEEE.

complexity_tolerance()#

- complexity_tolerance(signal, method='maxApEn', r_range=None, delay=None, dimension=None, show=False)[source]#

Automated selection of tolerance (r)

Estimate and select the optimal tolerance (r) parameter used by other entropy and other complexity algorithms.

Many complexity algorithms are built on the notion of self-similarity and recurrence, and how often a system revisits its past states. Considering two states as identical is straightforward for discrete systems (e.g., a sequence of

"A","B"and"C"states), but for continuous signals, we cannot simply look for when the two numbers are exactly the same. Instead, we have to pick a threshold by which to consider two points as similar.The tolerance r is essentially this threshold value (the numerical difference between two similar points that we “tolerate”). This parameter has a critical impact and is a major source of inconsistencies in the literature.

Different methods have been described to estimate the most appropriate tolerance value:

maxApEn: Different values of tolerance will be tested and the one where the approximate entropy (ApEn) is maximized will be selected and returned (Chen, 2008).

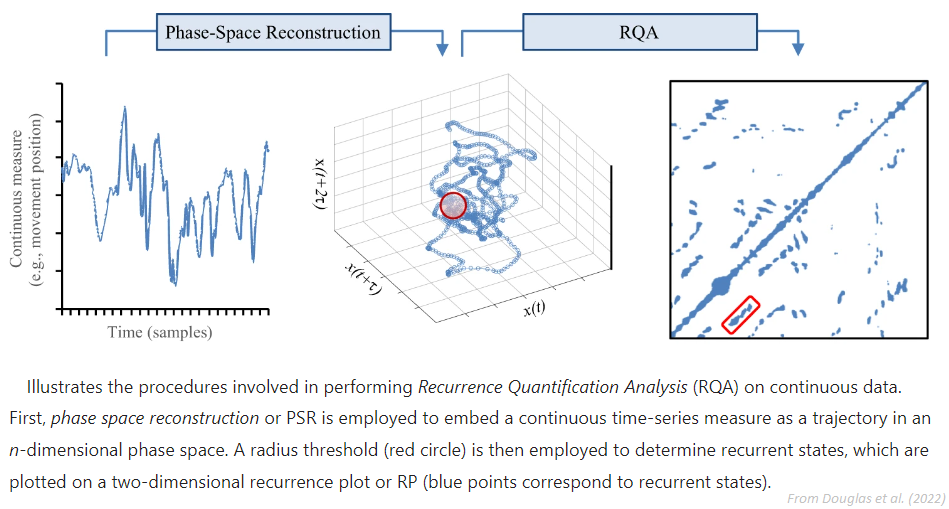

recurrence: The tolerance that yields a recurrence rate (see

RQA) close to 1% will be returned. Note that this method is currently not suited for very long signals, as it is based on a recurrence matrix, which size is close to n^2. Help is needed to address this limitation.neighbours: The tolerance that yields a number of nearest neighbours (NN) close to 2% will be returned.

As these methods are computationally expensive, other fast heuristics are available:

sd: r = 0.2 * standard deviation (SD) of the signal will be returned. This is the most commonly used value in the literature, though its appropriateness is questionable.

makowski: Adjusted value based on the SD, the embedding dimension and the signal’s length. See our study.

nolds: Adjusted value based on the SD and the dimension. The rationale is that the chebyshev distance (used in various metrics) rises logarithmically with increasing dimension.

0.5627 * np.log(dimension) + 1.3334is the logarithmic trend line for the chebyshev distance of vectors sampled from a univariate normal distribution. A constant of0.1164is used so thattolerance = 0.2 * SDsfordimension = 2(originally in CSchoel/nolds).singh2016: Makes a histogram of the Chebyshev distance matrix and returns the upper bound of the modal bin.

chon2009: Acknowledging that computing multiple ApEns is computationally expensive, Chon (2009) suggested an approximation based a heuristic algorithm that takes into account the length of the signal, its short-term and long-term variability, and the embedding dimension m. Initially defined only for m in [2-7], we expanded this to work with value of m (though the accuracy is not guaranteed beyond m = 4).

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

method (str) – Can be

"maxApEn"(default),"sd","recurrence","neighbours","nolds","chon2009", or"neurokit".r_range (Union[list, int]) – The range of tolerance values (or the number of values) to test. Only used if

methodis"maxApEn"or"recurrence". IfNone(default), the default range will be used;np.linspace(0.02, 0.8, r_range) * np.std(signal, ddof=1)for"maxApEn", andnp. linspace(0, np.max(d), 30 + 1)[1:]for"recurrence". You can set a lower number for faster results.delay (int) – Only used if

method="maxApEn". Seeentropy_approximate().dimension (int) – Only used if

method="maxApEn". Seeentropy_approximate().show (bool) – If

Trueand method is"maxApEn", will plot the ApEn values for each value of r.

- Returns:

float – The optimal tolerance value.

dict – A dictionary containing additional information.

Examples

Example 1: The method based on the SD of the signal is fast. The plot shows the d distribution of the values making the signal, and the width of the arrow represents the chosen

rparameter.

In [1]: import neurokit2 as nk # Simulate signal In [2]: signal = nk.signal_simulate(duration=2, frequency=[5, 7, 9, 12, 15]) # Fast method (based on the standard deviation) In [3]: r, info = nk.complexity_tolerance(signal, method = "sd", show=True)

In [4]: r Out[4]: np.float64(0.1581534263085267)

The dimension can be taken into account: .. ipython:: python

# nolds method @savefig p_complexity_tolerance2.png scale=100% r, info = nk.complexity_tolerance(signal, method = “nolds”, dimension=3, show=True) @suppress plt.close()

In [5]: r Out[5]: np.float64(0.1581534263085267)

Example 2: The method based on the recurrence rate will display the rates according to different values of tolerance. The horizontal line indicates 5%.

In [6]: r, info = nk.complexity_tolerance(signal, delay=1, dimension=10, ...: method = 'recurrence', show=True) ...:

In [7]: r Out[7]: np.float64(0.2662934154435781)

An alternative, better suited for long signals is to use nearest neighbours.

In [8]: r, info = nk.complexity_tolerance(signal, delay=1, dimension=10, ...: method = 'neighbours', show=True) ...:

Another option is to use the density of distances.

In [9]: r, info = nk.complexity_tolerance(signal, delay=1, dimension=3, ...: method = 'bin', show=True) ...:

Example 3: The default method selects the tolerance at which ApEn is maximized.

# Slow method In [10]: r, info = nk.complexity_tolerance(signal, delay=8, dimension=6, ....: method = 'maxApEn', show=True) ....:

In [11]: r Out[11]: np.float64(0.4902756215564327)

Example 4: The tolerance values that are tested can be modified to get a more precise estimate.

# Narrower range In [12]: r, info = nk.complexity_tolerance(signal, delay=8, dimension=6, method = 'maxApEn', ....: r_range=np.linspace(0.002, 0.8, 30), show=True) ....:

In [13]: r Out[13]: np.float64(0.4973103448275862)

References

Chon, K. H., Scully, C. G., & Lu, S. (2009). Approximate entropy for all signals. IEEE engineering in medicine and biology magazine, 28(6), 18-23.

Lu, S., Chen, X., Kanters, J. K., Solomon, I. C., & Chon, K. H. (2008). Automatic selection of the threshold value r for approximate entropy. IEEE Transactions on Biomedical Engineering, 55(8), 1966-1972.

Chen, X., Solomon, I. C., & Chon, K. H. (2008). Parameter selection criteria in approximate entropy and sample entropy with application to neural respiratory signals. Am. J. Physiol. Regul. Integr. Comp. Physiol.

Singh, A., Saini, B. S., & Singh, D. (2016). An alternative approach to approximate entropy threshold value (r) selection: application to heart rate variability and systolic blood pressure variability under postural challenge. Medical & biological engineering & computing, 54(5), 723-732.

complexity_k()#

- complexity_k(signal, k_max='max', show=False)[source]#

Automated selection of k for Higuchi Fractal Dimension (HFD)

The optimal k-max is computed based on the point at which HFD values plateau for a range of k-max values (see Vega, 2015).

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

k_max (Union[int, str, list], optional) – Maximum number of interval times (should be greater than or equal to 3) to be tested. If

max, it selects the maximum possible value corresponding to half the length of the signal.show (bool) – Visualise the slope of the curve for the selected kmax value.

- Returns:

k (float) – The optimal kmax of the time series.

info (dict) – A dictionary containing additional information regarding the parameters used to compute optimal kmax.

See also

Examples

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=2, sampling_rate=100, frequency=[5, 6], noise=0.5) In [3]: k_max, info = nk.complexity_k(signal, k_max='default', show=True)

In [4]: k_max Out[4]: np.int64(21)

References

Higuchi, T. (1988). Approach to an irregular time series on the basis of the fractal theory. Physica D: Nonlinear Phenomena, 31(2), 277-283.

Vega, C. F., & Noel, J. (2015, June). Parameters analyzed of Higuchi’s fractal dimension for EEG brain signals. In 2015 Signal Processing Symposium (SPSympo) (pp. 1-5). IEEE. https:// ieeexplore.ieee.org/document/7168285

Fractal Dimension#

fractal_katz()#

- fractal_katz(signal)[source]#

Katz’s Fractal Dimension (KFD)

Computes Katz’s Fractal Dimension (KFD). The euclidean distances between successive points in the signal are summed and averaged, and the maximum distance between the starting point and any other point in the sample.

Fractal dimensions range from 1.0 for straight lines, through approximately 1.15 for random-walks, to approaching 1.5 for the most convoluted waveforms.

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

- Returns:

kfd (float) – Katz’s fractal dimension of the single time series.

info (dict) – A dictionary containing additional information (currently empty, but returned nonetheless for consistency with other functions).

See also

Examples

Step 1. Simulate different kinds of signals

In [1]: import neurokit2 as nk In [2]: import numpy as np # Simulate straight line In [3]: straight = np.linspace(-1, 1, 2000) # Simulate random In [4]: random = nk.complexity_simulate(duration=2, method="randomwalk") In [5]: random = nk.rescale(random, [-1, 1]) # Simulate simple In [6]: simple = nk.signal_simulate(duration=2, frequency=[5, 10]) # Simulate complex In [7]: complex = nk.signal_simulate(duration=2, ...: frequency=[1, 3, 6, 12], ...: noise = 0.1) ...: In [8]: nk.signal_plot([straight, random, simple, complex])

Step 2. Compute KFD for each of them

In [9]: KFD, _ = nk.fractal_katz(straight) In [10]: KFD Out[10]: np.float64(1.0) In [11]: KFD, _ = nk.fractal_katz(random) In [12]: KFD Out[12]: np.float64(2.067918829049598) In [13]: KFD, _ = nk.fractal_katz(simple) In [14]: KFD Out[14]: np.float64(2.0418574763920256) In [15]: KFD, _ = nk.fractal_katz(complex) In [16]: KFD Out[16]: np.float64(4.027396828348657)

References

Katz, M. J. (1988). Fractals and the analysis of waveforms. Computers in Biology and Medicine, 18(3), 145-156. doi:10.1016/0010-4825(88)90041-8.

fractal_linelength()#

- fractal_linelength(signal)[source]#

Line Length (LL)

Line Length (LL, also known as curve length), stems from a modification of the

Katz fractal dimensionalgorithm, with the goal of making it more efficient and accurate (especially for seizure onset detection).It basically corresponds to the average of the absolute consecutive differences of the signal, and was made to be used within subwindows. Note that this does not technically measure the fractal dimension, but the function was named with the

fractal_prefix due to its conceptual similarity with Katz’s fractal dimension.- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

- Returns:

float – Line Length.

dict – A dictionary containing additional information (currently empty, but returned nonetheless for consistency with other functions).

See also

Examples

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=2, sampling_rate=200, frequency=[5, 6, 10]) In [3]: ll, _ = nk.fractal_linelength(signal) In [4]: ll Out[4]: np.float64(0.11575342908508135)

References

Esteller, R., Echauz, J., Tcheng, T., Litt, B., & Pless, B. (2001, October). Line length: an efficient feature for seizure onset detection. In 2001 Conference Proceedings of the 23rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Vol. 2, pp. 1707-1710). IEEE.

fractal_petrosian()#

- fractal_petrosian(signal, symbolize='C', show=False)[source]#

Petrosian fractal dimension (PFD)

Petrosian (1995) proposed a fast method to estimate the fractal dimension by converting the signal into a binary sequence from which the fractal dimension is estimated. Several variations of the algorithm exist (e.g.,

"A","B","C"or"D"), primarily differing in the way the discrete (symbolic) sequence is created (see func:complexity_symbolize for details). The most common method ("C", by default) binarizes the signal by the sign of consecutive differences.\[\frac{log(N)}{log(N) + log(\frac{N}{N+0.4N_{\delta}})}\]Most of these methods assume that the signal is periodic (without a linear trend). Linear detrending might be useful to eliminate linear trends (see

signal_detrend()).See also

information_mutual,entropy_svd- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

symbolize (str) – Method to convert a continuous signal input into a symbolic (discrete) signal. By default, assigns 0 and 1 to values below and above the mean. Can be

Noneto skip the process (in case the input is already discrete). Seecomplexity_symbolize()for details.show (bool) – If

True, will show the discrete the signal.

- Returns:

pfd (float) – The petrosian fractal dimension (PFD).

info (dict) – A dictionary containing additional information regarding the parameters used to compute PFD.

Examples

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=2, frequency=[5, 12]) In [3]: pfd, info = nk.fractal_petrosian(signal, symbolize = "C", show=True)

In [4]: pfd Out[4]: np.float64(1.0012592763505226) In [5]: info Out[5]: {'Symbolization': 'C'}

References

Esteller, R., Vachtsevanos, G., Echauz, J., & Litt, B. (2001). A comparison of waveform fractal dimension algorithms. IEEE Transactions on Circuits and Systems I: Fundamental Theory and Applications, 48(2), 177-183.

Petrosian, A. (1995, June). Kolmogorov complexity of finite sequences and recognition of different preictal EEG patterns. In Proceedings eighth IEEE symposium on computer-based medical systems (pp. 212-217). IEEE.

Kumar, D. K., Arjunan, S. P., & Aliahmad, B. (2017). Fractals: applications in biological Signalling and image processing. CRC Press.

Goh, C., Hamadicharef, B., Henderson, G., & Ifeachor, E. (2005, June). Comparison of fractal dimension algorithms for the computation of EEG biomarkers for dementia. In 2nd International Conference on Computational Intelligence in Medicine and Healthcare (CIMED2005).

fractal_sevcik()#

- fractal_sevcik(signal)[source]#

Sevcik Fractal Dimension (SFD)

The SFD algorithm was proposed to calculate the fractal dimension of waveforms by Sevcik (1998). This method can be used to quickly measure the complexity and randomness of a signal.

Note

Some papers (e.g., Wang et al. 2017) suggest adding

np.log(2)to the numerator, but it’s unclear why, so we stuck to the original formula for now. But if you have an idea, please let us know!- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

- Returns:

sfd (float) – The sevcik fractal dimension.

info (dict) – An empty dictionary returned for consistency with the other complexity functions.

See also

Examples

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=2, frequency=5) In [3]: sfd, _ = nk.fractal_sevcik(signal) In [4]: sfd Out[4]: np.float64(1.361438527611129)

References

Sevcik, C. (2010). A procedure to estimate the fractal dimension of waveforms. arXiv preprint arXiv:1003.5266.

Kumar, D. K., Arjunan, S. P., & Aliahmad, B. (2017). Fractals: applications in biological Signalling and image processing. CRC Press.

Wang, H., Li, J., Guo, L., Dou, Z., Lin, Y., & Zhou, R. (2017). Fractal complexity-based feature extraction algorithm of communication signals. Fractals, 25(04), 1740008.

Goh, C., Hamadicharef, B., Henderson, G., & Ifeachor, E. (2005, June). Comparison of fractal dimension algorithms for the computation of EEG biomarkers for dementia. In 2nd International Conference on Computational Intelligence in Medicine and Healthcare (CIMED2005).

fractal_nld()#

- fractal_nld(signal, corrected=False)[source]#

Fractal dimension via Normalized Length Density (NLDFD)

NLDFD is a very simple index corresponding to the average absolute consecutive differences of the (standardized) signal (

np.mean(np.abs(np.diff(std_signal)))). This method was developed for measuring signal complexity of very short durations (< 30 samples), and can be used for instance when continuous signal FD changes (or “running” FD) are of interest (by computing it on sliding windows, see example).For methods such as Higuchi’s FD, the standard deviation of the window FD increases sharply when the epoch becomes shorter. The NLD method results in lower standard deviation especially for shorter epochs, though at the expense of lower accuracy in average window FD.

See also

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

corrected (bool) – If

True, will rescale the output value according to the power model estimated by Kalauzi et al. (2009) to make it more comparable with “true” FD range, as follows:FD = 1.9079*((NLD-0.097178)^0.18383). Note that this can result innp.nanif the result of the difference is negative.

- Returns:

fd (DataFrame) – A dataframe containing the fractal dimension across epochs.

info (dict) – A dictionary containing additional information (currently, but returned nonetheless for consistency with other functions).

Examples

Example 1: Usage on a short signal

In [1]: import neurokit2 as nk # Simulate a short signal with duration of 0.5s In [2]: signal = nk.signal_simulate(duration=0.5, frequency=[3, 5]) # Compute Fractal Dimension In [3]: fd, _ = nk.fractal_nld(signal, corrected=False) In [4]: fd Out[4]: np.float64(0.023124767861850155)

Example 2: Compute FD-NLD on non-overlapping windows

In [5]: import numpy as np # Simulate a long signal with duration of 5s In [6]: signal = nk.signal_simulate(duration=5, frequency=[3, 5, 10], noise=0.1) # We want windows of size=100 (0.1s) In [7]: n_windows = len(signal) // 100 # How many windows # Split signal into windows In [8]: windows = np.array_split(signal, n_windows) # Compute FD-NLD on all windows In [9]: nld = [nk.fractal_nld(i, corrected=False)[0] for i in windows] In [10]: np.mean(nld) # Get average Out[10]: np.float64(0.6012469171984993)

Example 3: Calculate FD-NLD on sliding windows

# Simulate a long signal with duration of 5s In [11]: signal = nk.signal_simulate(duration=5, frequency=[3, 5, 10], noise=0.1) # Add period of noise In [12]: signal[1000:3000] = signal[1000:3000] + np.random.normal(0, 1, size=2000) # Create function-wrapper that only return the NLD value In [13]: nld = lambda x: nk.fractal_nld(x, corrected=False)[0] # Use them in a rolling window of 100 samples (0.1s) In [14]: rolling_nld = pd.Series(signal).rolling(100, min_periods = 100, center=True).apply(nld) In [15]: nk.signal_plot([signal, rolling_nld], subplots=True, labels=["Signal", "FD-NLD"])

References

Kalauzi, A., Bojić, T., & Rakić, L. (2009). Extracting complexity waveforms from one-dimensional signals. Nonlinear biomedical physics, 3(1), 1-11.

fractal_psdslope()#

- fractal_psdslope(signal, method='voss1988', show=False, **kwargs)[source]#

Fractal dimension via Power Spectral Density (PSD) slope

Fractal exponent can be computed from Power Spectral Density slope (PSDslope) analysis in signals characterized by a frequency power-law dependence.

It first transforms the time series into the frequency domain, and breaks down the signal into sine and cosine waves of a particular amplitude that together “add-up” to represent the original signal. If there is a systematic relationship between the frequencies in the signal and the power of those frequencies, this will reveal itself in log-log coordinates as a linear relationship. The slope of the best fitting line is taken as an estimate of the fractal scaling exponent and can be converted to an estimate of the fractal dimension.

A slope of 0 is consistent with white noise, and a slope of less than 0 but greater than -1, is consistent with pink noise i.e., 1/f noise. Spectral slopes as steep as -2 indicate fractional Brownian motion, the epitome of random walk processes.

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

method (str) – Method to estimate the fractal dimension from the slope, can be

"voss1988"(default) or"hasselman2013".show (bool) – If True, returns the log-log plot of PSD versus frequency.

**kwargs – Other arguments to be passed to

signal_psd()(such asmethod).

- Returns:

slope (float) – Estimate of the fractal dimension obtained from PSD slope analysis.

info (dict) – A dictionary containing additional information regarding the parameters used to perform PSD slope analysis.

Examples

In [1]: import neurokit2 as nk # Simulate a Signal with Laplace Noise In [2]: signal = nk.signal_simulate(duration=2, sampling_rate=200, frequency=[5, 6], noise=0.5) # Compute the Fractal Dimension from PSD slope In [3]: psdslope, info = nk.fractal_psdslope(signal, show=True)

In [4]: psdslope Out[4]: np.float64(2.513771973465163)

References

https://complexity-methods.github.io/book/power-spectral-density-psd-slope.html

Hasselman, F. (2013). When the blind curve is finite: dimension estimation and model inference based on empirical waveforms. Frontiers in Physiology, 4, 75. https://doi.org/10.3389/fphys.2013.00075

Voss, R. F. (1988). Fractals in nature: From characterization to simulation. The Science of Fractal Images, 21-70.

Eke, A., Hermán, P., Kocsis, L., and Kozak, L. R. (2002). Fractal characterization of complexity in temporal physiological signals. Physiol. Meas. 23, 1-38.

fractal_higuchi()#

- fractal_higuchi(signal, k_max='default', show=False, **kwargs)[source]#

Higuchi’s Fractal Dimension (HFD)

The Higuchi’s Fractal Dimension (HFD) is an approximate value for the box-counting dimension for time series. It is computed by reconstructing k-max number of new data sets. For each reconstructed data set, curve length is computed and plotted against its corresponding k-value on a log-log scale. HFD corresponds to the slope of the least-squares linear trend.

Values should fall between 1 and 2. For more information about the k parameter selection, see the

complexity_k()optimization function.- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

k_max (str or int) – Maximum number of interval times (should be greater than or equal to 2). If

"default", the optimal k-max is estimated usingcomplexity_k(), which is slow.show (bool) – Visualise the slope of the curve for the selected k_max value.

**kwargs (optional) – Currently not used.

- Returns:

HFD (float) – Higuchi’s fractal dimension of the time series.

info (dict) – A dictionary containing additional information regarding the parameters used to compute Higuchi’s fractal dimension.

See also

Examples

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=1, sampling_rate=100, frequency=[3, 6], noise = 0.2) In [3]: k_max, info = nk.complexity_k(signal, k_max='default', show=True) In [4]: hfd, info = nk.fractal_higuchi(signal, k_max=k_max, show=True)

In [5]: hfd Out[5]: np.float64(1.9697823793316376)

References

Higuchi, T. (1988). Approach to an irregular time series on the basis of the fractal theory. Physica D: Nonlinear Phenomena, 31(2), 277-283.

Vega, C. F., & Noel, J. (2015, June). Parameters analyzed of Higuchi’s fractal dimension for EEG brain signals. In 2015 Signal Processing Symposium (SPSympo) (pp. 1-5). IEEE. https://ieeexplore.ieee.org/document/7168285

fractal_density()#

- fractal_density(signal, delay=1, tolerance='sd', bins=None, show=False, **kwargs)[source]#

Density Fractal Dimension (DFD)

This is a Work in Progress (WIP). The idea is to find a way of, essentially, averaging attractors. Because one can not directly average the trajectories, one way is to convert the attractor to a 2D density matrix that we can use similarly to a time-frequency heatmap. However, it is very unclear how to then derive meaningful indices from this density plot. Also, how many bins, or smoothing, should one use?

Basically, this index is exploratory and should not be used in its state. However, if you’re interested in the problem of “average” attractors (e.g., from multiple epochs / trials), and you want to think about it with us, feel free to let us know!

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

delay (int) – Time delay (often denoted Tau \(\tau\), sometimes referred to as lag) in samples. See

complexity_delay()to estimate the optimal value for this parameter.tolerance (float) – Tolerance (often denoted as r), distance to consider two data points as similar. If

"sd"(default), will be set to \(0.2 * SD_{signal}\). Seecomplexity_tolerance()to estimate the optimal value for this parameter.bins (int) – If not

Nonebut an integer, will use this value for the number of bins instead of a value based on thetoleranceparameter.show (bool) – Plot the density matrix. Defaults to

False.**kwargs – Other arguments to be passe.

- Returns:

dfd (float) – The density fractal dimension.

info (dict) – A dictionary containing additional information.

Examples

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=2, frequency=[5, 9], noise=0.01) In [3]: dfd, _ = nk.fractal_density(signal, delay=20, show=True)

In [4]: signal = nk.signal_simulate(duration=4, frequency=[5, 10, 11], noise=0.01) In [5]: epochs = nk.epochs_create(signal, events=20) In [6]: dfd, info1 = nk.fractal_density(epochs, delay=20, bins=20, show=True)

Compare the complexity of two signals.

Warning

Help is needed to find a way to make statistics and comparing two density maps.

In [7]: import matplotlib.pyplot as plt In [8]: sig2 = nk.signal_simulate(duration=4, frequency=[4, 12, 14], noise=0.01) In [9]: epochs2 = nk.epochs_create(sig2, events=20) In [10]: dfd, info2 = nk.fractal_density(epochs2, delay=20, bins=20) # Difference between two density maps In [11]: D = info1["Average"] - info2["Average"] In [12]: plt.imshow(nk.standardize(D), cmap='RdBu') Out[12]: <matplotlib.image.AxesImage at 0x7fa8b1b07c50>

fractal_hurst()#

- fractal_hurst(signal, scale='default', corrected=True, show=False)[source]#

Hurst Exponent (H)

This function estimates the Hurst exponent via the standard rescaled range (R/S) approach, but other methods exist, such as Detrended Fluctuation Analysis (DFA, see

fractal_dfa()).The Hurst exponent is a measure for the “long-term memory” of a signal. It can be used to determine whether the time series is more, less, or equally likely to increase if it has increased in previous steps. This property makes the Hurst exponent especially interesting for the analysis of stock data. It typically ranges from 0 to 1, with 0.5 corresponding to a Brownian motion. If H < 0.5, the time-series covers less “distance” than a random walk (the memory of the signal decays faster than at random), and vice versa.

The R/S approach first splits the time series into non-overlapping subseries of length n. R and S (sigma) are then calculated for each subseries and the mean is taken over all subseries yielding (R/S)_n. This process is repeated for several lengths n. The final exponent is then derived from fitting a straight line to the plot of \(log((R/S)_n)\) vs \(log(n)\).

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values. or dataframe.

scale (list) – A list containing the lengths of the windows (number of data points in each subseries) that the signal is divided into. See

fractal_dfa()for more information.corrected (boolean) – if

True, the Anis-Lloyd-Peters correction factor will be applied to the output according to the expected value for the individual (R/S) values.show (bool) – If

True, returns a plot.

See also

Examples

In [1]: import neurokit2 as nk # Simulate Signal with duration of 2s In [2]: signal = nk.signal_simulate(duration=2, frequency=5) # Compute Hurst Exponent In [3]: h, info = nk.fractal_hurst(signal, corrected=True, show=True) In [4]: h Out[4]: np.float64(0.9654065297154627)

References

Brandi, G., & Di Matteo, T. (2021). On the statistics of scaling exponents and the Multiscaling Value at Risk. The European Journal of Finance, 1-22.

Annis, A. A., & Lloyd, E. H. (1976). The expected value of the adjusted rescaled Hurst range of independent normal summands. Biometrika, 63(1), 111-116.

fractal_correlation()#

- fractal_correlation(signal, delay=1, dimension=2, radius=64, show=False, **kwargs)[source]#

Correlation Dimension (CD)

The Correlation Dimension (CD, also denoted D2) is a lower bound estimate of the fractal dimension of a signal.

The time series is first

time-delay embedded, and distances between all points in the trajectory are calculated. The “correlation sum” is then computed, which is the proportion of pairs of points whose distance is smaller than a given radius. The final correlation dimension is then approximated by a log-log graph of correlation sum vs. a sequence of radiuses.This function can be called either via

fractal_correlation()orcomplexity_cd().- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

delay (int) – Time delay (often denoted Tau \(\tau\), sometimes referred to as lag) in samples. See

complexity_delay()to estimate the optimal value for this parameter.dimension (int) – Embedding Dimension (m, sometimes referred to as d or order). See

complexity_dimension()to estimate the optimal value for this parameter.radius (Union[str, int, list]) – The sequence of radiuses to test. If an integer is passed, will get an exponential sequence of length

radiusranging from 2.5% to 50% of the distance range. Methods implemented in other packages can be used via"nolds","Corr_Dim"or"boon2008".show (bool) – Plot of correlation dimension if

True. Defaults toFalse.**kwargs – Other arguments to be passed (not used for now).

- Returns:

cd (float) – The Correlation Dimension (CD) of the time series.

info (dict) – A dictionary containing additional information regarding the parameters used to compute the correlation dimension.

Examples

For some completely unclear reasons, uncommenting the following examples messes up the figures path of all the subsequent documented function. So, commenting it for now.

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=1, frequency=[10, 14], noise=0.1) # @savefig p_fractal_correlation1.png scale=100% # cd, info = nk.fractal_correlation(signal, radius=32, show=True) # @suppress # plt.close()

# @savefig p_fractal_correlation2.png scale=100% # cd, info = nk.fractal_correlation(signal, radius="nolds", show=True) # @suppress # plt.close()

# @savefig p_fractal_correlation3.png scale=100% # cd, info = nk.fractal_correlation(signal, radius='boon2008', show=True) # @suppress # plt.close()

References

Bolea, J., Laguna, P., Remartínez, J. M., Rovira, E., Navarro, A., & Bailón, R. (2014). Methodological framework for estimating the correlation dimension in HRV signals. Computational and mathematical methods in medicine, 2014.

Boon, M. Y., Henry, B. I., Suttle, C. M., & Dain, S. J. (2008). The correlation dimension: A useful objective measure of the transient visual evoked potential?. Journal of vision, 8(1), 6-6.

fractal_dfa()#

- fractal_dfa(signal, scale='default', overlap=True, integrate=True, order=1, multifractal=False, q='default', maxdfa=False, show=False, **kwargs)[source]#

(Multifractal) Detrended Fluctuation Analysis (DFA or MFDFA)

Detrended fluctuation analysis (DFA) is used to find long-term statistical dependencies in time series.

For monofractal DFA, the output alpha \(\alpha\) corresponds to the slope of the linear trend between the scale factors and the fluctuations. For multifractal DFA, the slope values under different q values are actually generalised Hurst exponents h. Monofractal DFA corresponds to MFDFA with q = 2, and its output is actually an estimation of the Hurst exponent (\(h_{(2)}\)).

The Hurst exponent is the measure of long range autocorrelation of a signal, and \(h_{(2)} > 0.5\) suggests the presence of long range correlation, while \(h_{(2)} < 0.5`suggests short range correlations. If :math:`h_{(2)} = 0.5\), it indicates uncorrelated indiscriminative fluctuations, i.e. a Brownian motion.

Multifractal DFA returns the generalised Hurst exponents h for different values of q. It is converted to the multifractal scaling exponent Tau \(\tau_{(q)}\), which non-linear relationship with q can indicate multifractility. From there, we derive the singularity exponent H (or \(\alpha\)) (also known as Hölder’s exponents) and the singularity dimension D (or \(f(\alpha)\)). The variation of D with H is known as multifractal singularity spectrum (MSP), and usually has shape of an inverted parabola. It measures the long range correlation property of a signal. From these elements, different features are extracted:

Width: The width of the singularity spectrum, which quantifies the degree of the multifractality. In the case of monofractal signals, the MSP width is zero, since h(q) is independent of q.

Peak: The value of the singularity exponent H corresponding to the peak of singularity dimension D. It is a measure of the self-affinity of the signal, and a high value is an indicator of high degree of correlation between the data points. In the other words, the process is recurrent and repetitive.

Mean: The mean of the maximum and minimum values of singularity exponent H, which quantifies the average fluctuations of the signal.

Max: The value of singularity spectrum D corresponding to the maximum value of singularity exponent H, which indicates the maximum fluctuation of the signal.

Delta: the vertical distance between the singularity spectrum D where the singularity exponents are at their minimum and maximum. Corresponds to the range of fluctuations of the signal.

Asymmetry: The Asymmetric Ratio (AR) corresponds to the centrality of the peak of the spectrum. AR = 0.5 indicates that the multifractal spectrum is symmetric (Orozco-Duque et al., 2015).

Fluctuation: The h-fluctuation index (hFI) is defined as the power of the second derivative of h(q). See Orozco-Duque et al. (2015).

Increment: The cumulative function of the squared increments (\(\alpha CF\)) of the generalized Hurst’s exponents between consecutive moment orders is a more robust index of the distribution of the generalized Hurst’s exponents (Faini et al., 2021).

This function can be called either via

fractal_dfa()orcomplexity_dfa(), and its multifractal variant can be directly accessed viafractal_mfdfa()orcomplexity_mfdfa().Note

Help is needed to implement the modified formula to compute the slope when q = 0. See for instance Faini et al. (2021). See LRydin/MFDFA#33

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

scale (list) – A list containing the lengths of the windows (number of data points in each subseries) that the signal is divided into. Also referred to as Tau \(\tau\). If

"default", will set it to a logarithmic scale (so that each window scale has the same weight) with a minimum of 4 and maximum of a tenth of the length (to have more than 10 windows to calculate the average fluctuation).overlap (bool) – Defaults to

True, where the windows will have a 50% overlap with each other, otherwise non-overlapping windows will be used.integrate (bool) – It is common practice to convert the signal to a random walk (i.e., detrend and integrate, which corresponds to the signal ‘profile’) in order to avoid having too small exponent values. Note that it leads to the flattening of the signal, which can lead to the loss of some details (see Ihlen, 2012 for an explanation). Note that for strongly anticorrelated signals, this transformation should be applied two times (i.e., provide

np.cumsum(signal - np.mean(signal))instead ofsignal).order (int) – The order of the polynomial trend for detrending. 1 corresponds to a linear detrending.

multifractal (bool) – If

True, compute Multifractal Detrended Fluctuation Analysis (MFDFA), in which case the argumentqis taken into account.q (Union[int, list, np.array]) – The sequence of fractal exponents when

multifractal=True. Must be a sequence between -10 and 10 (note that zero will be removed, since the code does not converge there). Settingq = 2(default for DFA) gives a result of a standard DFA. For instance, Ihlen (2012) usesq = [-5, -3, -1, 0, 1, 3, 5](default when for multifractal). In general, positive q moments amplify the contribution of fractal components with larger amplitude and negative q moments amplify the contribution of fractal with smaller amplitude (Kantelhardt et al., 2002).maxdfa (bool) – If

True, it will locate the knee of the fluctuations (usingfind_knee()) and use that as a maximum scale value. It computes max. DFA (a similar method exists inentropy_rate()).show (bool) – Visualise the trend between the window size and the fluctuations.

**kwargs (optional) – Currently not used.

- Returns:

dfa (float or pd.DataFrame) – If

multifractalisFalse, one DFA value is returned for a single time series.parameters (dict) – A dictionary containing additional information regarding the parameters used to compute DFA. If

multifractalisFalse, the dictionary contains q value, a series of windows, fluctuations of each window and the slopes value of the log2(windows) versus log2(fluctuations) plot. IfmultifractalisTrue, the dictionary additionally contains the parameters of the singularity spectrum.

See also

Examples

Example 1: Monofractal DFA

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=10, frequency=[5, 7, 10, 14], noise=0.05) In [3]: dfa, info = nk.fractal_dfa(signal, show=True)

In [4]: dfa Out[4]: np.float64(0.6522425325867764)

As we can see from the plot, the final value, corresponding to the slope of the red line, doesn’t capture properly the relationship. We can adjust the scale factors to capture the fractality of short-term fluctuations.

In [5]: scale = nk.expspace(10, 100, 20, base=2) In [6]: dfa, info = nk.fractal_dfa(signal, scale=scale, show=True)

Example 2: Multifractal DFA (MFDFA)

In [7]: mfdfa, info = nk.fractal_mfdfa(signal, q=[-5, -3, -1, 0, 1, 3, 5], show=True)

In [8]: mfdfa Out[8]: Width Peak Mean ... Asymmetry Fluctuation Increment 0 0.832351 0.777085 0.887995 ... -0.366751 0.000266 0.041385 [1 rows x 8 columns]

References

Faini, A., Parati, G., & Castiglioni, P. (2021). Multiscale assessment of the degree of multifractality for physiological time series. Philosophical Transactions of the Royal Society A, 379(2212), 20200254.

Orozco-Duque, A., Novak, D., Kremen, V., & Bustamante, J. (2015). Multifractal analysis for grading complex fractionated electrograms in atrial fibrillation. Physiological Measurement, 36(11), 2269-2284.

Ihlen, E. A. F. E. (2012). Introduction to multifractal detrended fluctuation analysis in Matlab. Frontiers in physiology, 3, 141.

Kantelhardt, J. W., Zschiegner, S. A., Koscielny-Bunde, E., Havlin, S., Bunde, A., & Stanley, H. E. (2002). Multifractal detrended fluctuation analysis of nonstationary time series. Physica A: Statistical Mechanics and its Applications, 316(1-4), 87-114.

Hardstone, R., Poil, S. S., Schiavone, G., Jansen, R., Nikulin, V. V., Mansvelder, H. D., & Linkenkaer-Hansen, K. (2012). Detrended fluctuation analysis: a scale-free view on neuronal oscillations. Frontiers in physiology, 3, 450.

fractal_tmf()#

- fractal_tmf(signal, n=40, show=False, **kwargs)[source]#

Multifractal Nonlinearity (tMF)

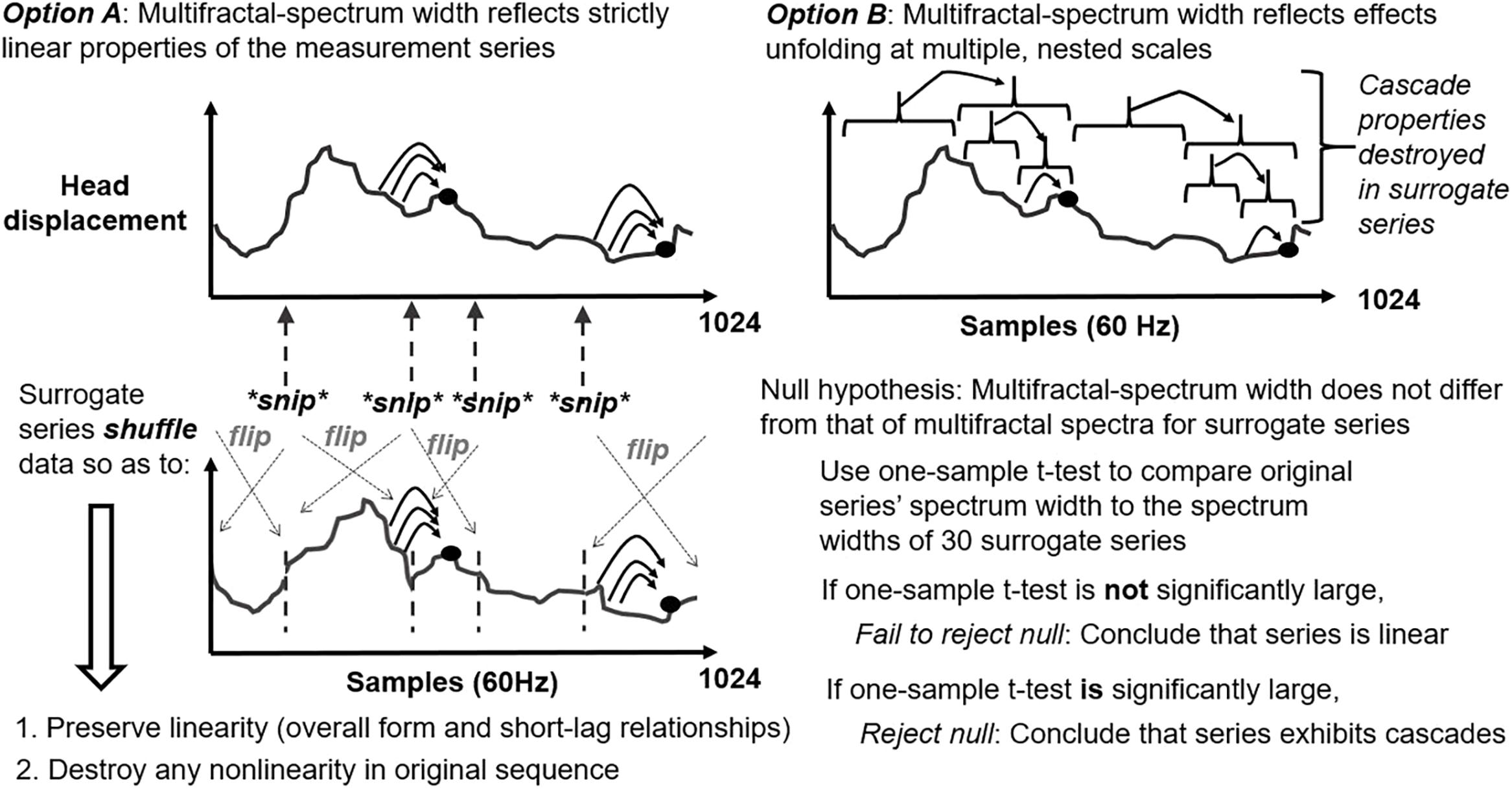

The Multifractal Nonlinearity index (tMF) is the t-value resulting from the comparison of the multifractality of the signal (measured by the spectrum width, see

fractal_dfa()) with the multifractality of linearizedsurrogatesobtained by the IAAFT method (i.e., reshuffled series with comparable linear structure).This statistics grows larger the more the original series departs from the multifractality attributable to the linear structure of IAAFT surrogates. When p-value reaches significance, we can conclude that the signal’s multifractality encodes processes that a linear contingency cannot.

This index provides an extension of the assessment of multifractality, of which the multifractal spectrum is by itself a measure of heterogeneity, rather than interactivity. As such, it cannot alone be used to assess the specific presence of cascade-like interactivity in the time series, but must be compared to the spectrum of a sample of its surrogates.

Both significantly negative and positive values can indicate interactivity, as any difference from the linear structure represented by the surrogates is an indication of nonlinear contingence. Indeed, if the degree of heterogeneity for the original series is significantly less than for the sample of linear surrogates, that is no less evidence of a failure of linearity than if the degree of heterogeneity is significantly greater.

Note

Help us review the implementation of this index by checking-it out and letting us know wether it is correct or not.

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

n (int) – Number of surrogates. The literature uses values between 30 and 40.

**kwargs (optional) – Other arguments to be passed to

fractal_dfa().

- Returns:

float – tMF index.

info (dict) – A dictionary containing additional information, such as the p-value.

See also

Examples

In [1]: import neurokit2 as nk # Simulate a Signal In [2]: signal = nk.signal_simulate(duration=1, sampling_rate=200, frequency=[5, 6, 12], noise=0.2) # Compute tMF In [3]: tMF, info = nk.fractal_tmf(signal, n=100, show=True)

In [4]: tMF # t-value Out[4]: np.float64(9.977836078771592) In [5]: info["p"] # p-value Out[5]: np.float64(1.2228022908024259e-16)

References

Ihlen, E. A., & Vereijken, B. (2013). Multifractal formalisms of human behavior. Human movement science, 32(4), 633-651.

Kelty-Stephen, D. G., Palatinus, K., Saltzman, E., & Dixon, J. A. (2013). A tutorial on multifractality, cascades, and interactivity for empirical time series in ecological science. Ecological Psychology, 25(1), 1-62.

Bell, C. A., Carver, N. S., Zbaracki, J. A., & Kelty-Stephen, D. G. (2019). Non-linear amplification of variability through interaction across scales supports greater accuracy in manual aiming: evidence from a multifractal analysis with comparisons to linear surrogates in the fitts task. Frontiers in physiology, 10, 998.

Entropy#

entropy_shannon()#

- entropy_shannon(signal=None, base=2, symbolize=None, show=False, freq=None, **kwargs)[source]#

Shannon entropy (SE or ShanEn)

Compute Shannon entropy (SE). Entropy is a measure of unpredictability of the state, or equivalently, of its average information content. Shannon entropy (SE) is one of the first and most basic measures of entropy and a foundational concept of information theory, introduced by Shannon (1948) to quantify the amount of information in a variable.

\[ShanEn = -\sum_{x \in \mathcal{X}} p(x) \log_2 p(x)\]Shannon attempted to extend Shannon entropy in what has become known as Differential Entropy (see

entropy_differential()).Because Shannon entropy was meant for symbolic sequences (discrete events such as [“A”, “B”, “B”, “A”]), it does not do well with continuous signals. One option is to binarize (i.e., cut) the signal into a number of bins using for instance

pd.cut(signal, bins=100, labels=False). This can be done automatically using themethodargument, which will be transferred tocomplexity_symbolize().This function can be called either via

entropy_shannon()orcomplexity_se().- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

base (float) – The logarithmic base to use, defaults to

2, giving a unit in bits. Note thatscipy. stats.entropy()uses Euler’s number (np.e) as default (the natural logarithm), giving a measure of information expressed in nats.symbolize (str) – Method to convert a continuous signal input into a symbolic (discrete) signal.

Noneby default, which skips the process (and assumes the input is already discrete). Seecomplexity_symbolize()for details.show (bool) – If

True, will show the discrete the signal.freq (np.array) – Instead of a signal, a vector of probabilities can be provided (used for instance in

entropy_permutation()).**kwargs – Optional arguments. Not used for now.

- Returns:

shanen (float) – The Shannon entropy of the signal.

info (dict) – A dictionary containing additional information regarding the parameters used to compute Shannon entropy.

See also

entropy_differential,entropy_cumulativeresidual,entropy_tsallis,entropy_renyi,entropy_maximumExamples

In [1]: import neurokit2 as nk In [2]: signal = [1, 1, 5, 5, 2, 8, 1] In [3]: _, freq = np.unique(signal, return_counts=True) In [4]: nk.entropy_shannon(freq=freq) Out[4]: (np.float64(1.8423709931771086), {'Symbolization': None, 'Base': 2})

# Simulate a Signal with Laplace Noise In [5]: signal = nk.signal_simulate(duration=2, frequency=5, noise=0.01) # Compute Shannon's Entropy In [6]: shanen, info = nk.entropy_shannon(signal, symbolize=3, show=True)

In [7]: shanen Out[7]: np.float64(1.5468851844151934)

Compare with

scipy(using the same base).In [8]: import scipy.stats # Make the binning ourselves In [9]: binned = pd.cut(signal, bins=3, labels=False) In [10]: scipy.stats.entropy(pd.Series(binned).value_counts()) Out[10]: np.float64(1.0722191042273421) In [11]: shanen, info = nk.entropy_shannon(binned, base=np.e) In [12]: shanen Out[12]: np.float64(1.0722191042273423)

References

Shannon, C. E. (1948). A mathematical theory of communication. The Bell system technical journal, 27(3), 379-423.

entropy_maximum()#

- entropy_maximum(signal)[source]#

Maximum Entropy (MaxEn)

Provides an upper bound for the entropy of a random variable, so that the empirical entropy (obtained for instance with

entropy_shannon()) will lie in between 0 and max. entropy.It can be useful to normalize the empirical entropy by the maximum entropy (which is made by default in some algorithms).

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

- Returns:

maxen (float) – The maximum entropy of the signal.

info (dict) – An empty dictionary returned for consistency with the other complexity functions.

See also

Examples

In [1]: import neurokit2 as nk In [2]: signal = [1, 1, 5, 5, 2, 8, 1] In [3]: maxen, _ = nk.entropy_maximum(signal) In [4]: maxen Out[4]: np.float64(2.0)

entropy_differential()#

- entropy_differential(signal, base=2, **kwargs)[source]#

Differential entropy (DiffEn)

Differential entropy (DiffEn; also referred to as continuous entropy) started as an attempt by Shannon to extend Shannon entropy. However, differential entropy presents some issues too, such as that it can be negative even for simple distributions (such as the uniform distribution).

This function can be called either via

entropy_differential()orcomplexity_diffen().- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

base (float) – The logarithmic base to use, defaults to

2, giving a unit in bits. Note thatscipy. stats.entropy()uses Euler’s number (np.e) as default (the natural logarithm), giving a measure of information expressed in nats.**kwargs (optional) – Other arguments passed to

scipy.stats.differential_entropy().

- Returns:

diffen (float) – The Differential entropy of the signal.

info (dict) – A dictionary containing additional information regarding the parameters used to compute Differential entropy.

See also

Examples

In [1]: import neurokit2 as nk # Simulate a Signal with Laplace Noise In [2]: signal = nk.signal_simulate(duration=2, frequency=5, noise=0.1) # Compute Differential Entropy In [3]: diffen, info = nk.entropy_differential(signal) In [4]: diffen Out[4]: np.float64(0.5408771949514075)

References

entropy_power()#

- entropy_power(signal, **kwargs)[source]#

Entropy Power (PowEn)

The Shannon Entropy Power (PowEn or SEP) is a measure of the effective variance of a random vector. It is based on the estimation of the density of the variable, thus relying on

density().Warning

We are not sure at all about the correct implementation of this function. Please consider helping us by double-checking the code against the formulas in the references.

- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

**kwargs – Other arguments to be passed to

density_bandwidth().

- Returns:

powen (float) – The computed entropy power measure.

info (dict) – A dictionary containing additional information regarding the parameters used.

See also

information_fisershannonExamples

In [1]: import neurokit2 as nk In [2]: import matplotlib.pyplot as plt In [3]: signal = nk.signal_simulate(duration=10, frequency=[10, 12], noise=0.1) In [4]: powen, info = nk.entropy_power(signal) In [5]: powen Out[5]: np.float64(0.05855919773025029) # Visualize the distribution that the entropy power is based on In [6]: plt.plot(info["Values"], info["Density"]) Out[6]: [<matplotlib.lines.Line2D at 0x7fa8b1f0f390>]

Change density bandwidth.

In [7]: powen, info = nk.entropy_power(signal, bandwidth=0.01) In [8]: powen Out[8]: np.float64(0.05855892943937228)

References

Guignard, F., Laib, M., Amato, F., & Kanevski, M. (2020). Advanced analysis of temporal data using Fisher-Shannon information: theoretical development and application in geosciences. Frontiers in Earth Science, 8, 255.

Vignat, C., & Bercher, J. F. (2003). Analysis of signals in the Fisher-Shannon information plane. Physics Letters A, 312(1-2), 27-33.

Dembo, A., Cover, T. M., & Thomas, J. A. (1991). Information theoretic inequalities. IEEE Transactions on Information theory, 37(6), 1501-1518.

entropy_tsallis()#

- entropy_tsallis(signal=None, q=1, symbolize=None, show=False, freq=None, **kwargs)[source]#

Tsallis entropy (TSEn)

Tsallis Entropy is an extension of

Shannon entropyto the case where entropy is nonextensive. It is similarly computed from a vector of probabilities of different states. Because it works on discrete inputs (e.g., [A, B, B, A, B]), it requires to transform the continuous signal into a discrete one.\[TSEn = \frac{1}{q - 1} \left( 1 - \sum_{x \in \mathcal{X}} p(x)^q \right)\]- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

q (float) – Tsallis’s q parameter, sometimes referred to as the entropic-index (default to 1).

symbolize (str) – Method to convert a continuous signal input into a symbolic (discrete) signal.

Noneby default, which skips the process (and assumes the input is already discrete). Seecomplexity_symbolize()for details.show (bool) – If

True, will show the discrete the signal.freq (np.array) – Instead of a signal, a vector of probabilities can be provided.

**kwargs – Optional arguments. Not used for now.

- Returns:

tsen (float) – The Tsallis entropy of the signal.

info (dict) – A dictionary containing additional information regarding the parameters used.

See also

Examples

In [1]: import neurokit2 as nk In [2]: signal = [1, 3, 3, 2, 6, 6, 6, 1, 0] In [3]: tsen, _ = nk.entropy_tsallis(signal, q=1) In [4]: tsen Out[4]: np.float64(1.5229550675313184) In [5]: shanen, _ = nk.entropy_shannon(signal, base=np.e) In [6]: shanen Out[6]: np.float64(1.5229550675313184)

References

Tsallis, C. (2009). Introduction to nonextensive statistical mechanics: approaching a complex world. Springer, 1(1), 2-1.

entropy_renyi()#

- entropy_renyi(signal=None, alpha=1, symbolize=None, show=False, freq=None, **kwargs)[source]#

Rényi entropy (REn or H)

In information theory, the Rényi entropy H generalizes the Hartley entropy, the Shannon entropy, the collision entropy and the min-entropy.

\(\alpha = 0\): the Rényi entropy becomes what is known as the Hartley entropy.

\(\alpha = 1\): the Rényi entropy becomes the :func:`Shannon entropy <entropy_shannon>`.

\(\alpha = 2\): the Rényi entropy becomes the collision entropy, which corresponds to the surprisal of “rolling doubles”.

It is mathematically defined as:

\[REn = \frac{1}{1-\alpha} \log_2 \left( \sum_{x \in \mathcal{X}} p(x)^\alpha \right)\]- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

alpha (float) – The alpha \(\alpha\) parameter (default to 1) for Rényi entropy.

symbolize (str) – Method to convert a continuous signal input into a symbolic (discrete) signal.

Noneby default, which skips the process (and assumes the input is already discrete). Seecomplexity_symbolize()for details.show (bool) – If

True, will show the discrete the signal.freq (np.array) – Instead of a signal, a vector of probabilities can be provided.

**kwargs – Optional arguments. Not used for now.

- Returns:

ren (float) – The Tsallis entropy of the signal.

info (dict) – A dictionary containing additional information regarding the parameters used.

See also

Examples

In [1]: import neurokit2 as nk In [2]: signal = [1, 3, 3, 2, 6, 6, 6, 1, 0] In [3]: tsen, _ = nk.entropy_renyi(signal, alpha=1) In [4]: tsen Out[4]: np.float64(1.5229550675313184) # Compare to Shannon function In [5]: shanen, _ = nk.entropy_shannon(signal, base=np.e) In [6]: shanen Out[6]: np.float64(1.5229550675313184) # Hartley Entropy In [7]: nk.entropy_renyi(signal, alpha=0)[0] Out[7]: np.float64(1.6094379124341003) # Collision Entropy In [8]: nk.entropy_renyi(signal, alpha=2)[0] Out[8]: np.float64(1.4500101755059984)

References

Rényi, A. (1961, January). On measures of entropy and information. In Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Contributions to the Theory of Statistics (Vol. 4, pp. 547-562). University of California Press.

entropy_approximate()#

- entropy_approximate(signal, delay=1, dimension=2, tolerance='sd', corrected=False, **kwargs)[source]#

Approximate entropy (ApEn) and its corrected version (cApEn)

Approximate entropy is a technique used to quantify the amount of regularity and the unpredictability of fluctuations over time-series data. The advantages of ApEn include lower computational demand (ApEn can be designed to work for small data samples (< 50 data points) and can be applied in real time) and less sensitive to noise. However, ApEn is heavily dependent on the record length and lacks relative consistency.

This function can be called either via

entropy_approximate()orcomplexity_apen(), and the corrected version viacomplexity_capen().- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

delay (int) – Time delay (often denoted Tau \(\tau\), sometimes referred to as lag) in samples. See

complexity_delay()to estimate the optimal value for this parameter.dimension (int) – Embedding Dimension (m, sometimes referred to as d or order). See

complexity_dimension()to estimate the optimal value for this parameter.tolerance (float) – Tolerance (often denoted as r), distance to consider two data points as similar. If

"sd"(default), will be set to \(0.2 * SD_{signal}\). Seecomplexity_tolerance()to estimate the optimal value for this parameter.corrected (bool) – If true, will compute corrected ApEn (cApEn), see Porta (2007).

**kwargs – Other arguments.

See also

- Returns:

apen (float) – The approximate entropy of the single time series.

info (dict) – A dictionary containing additional information regarding the parameters used to compute approximate entropy.

Examples

In [1]: import neurokit2 as nk In [2]: signal = nk.signal_simulate(duration=2, frequency=5) In [3]: apen, parameters = nk.entropy_approximate(signal) In [4]: apen Out[4]: np.float64(0.08837414074679684) In [5]: capen, parameters = nk.entropy_approximate(signal, corrected=True) In [6]: capen Out[6]: np.float64(0.08907775138332998)

References

Sabeti, M., Katebi, S., & Boostani, R. (2009). Entropy and complexity measures for EEG signal classification of schizophrenic and control participants. Artificial intelligence in medicine, 47(3), 263-274.

Shi, B., Zhang, Y., Yuan, C., Wang, S., & Li, P. (2017). Entropy analysis of short-term heartbeat interval time series during regular walking. Entropy, 19(10), 568.

entropy_sample()#

- entropy_sample(signal, delay=1, dimension=2, tolerance='sd', **kwargs)[source]#

Sample Entropy (SampEn)

Compute the sample entropy (SampEn) of a signal. SampEn is a modification of ApEn used for assessing complexity of physiological time series signals. It corresponds to the conditional probability that two vectors that are close to each other for m dimensions will remain close at the next m + 1 component.

This function can be called either via

entropy_sample()orcomplexity_sampen().- Parameters:

signal (Union[list, np.array, pd.Series]) – The signal (i.e., a time series) in the form of a vector of values.

delay (int) – Time delay (often denoted Tau \(\tau\), sometimes referred to as lag) in samples. See